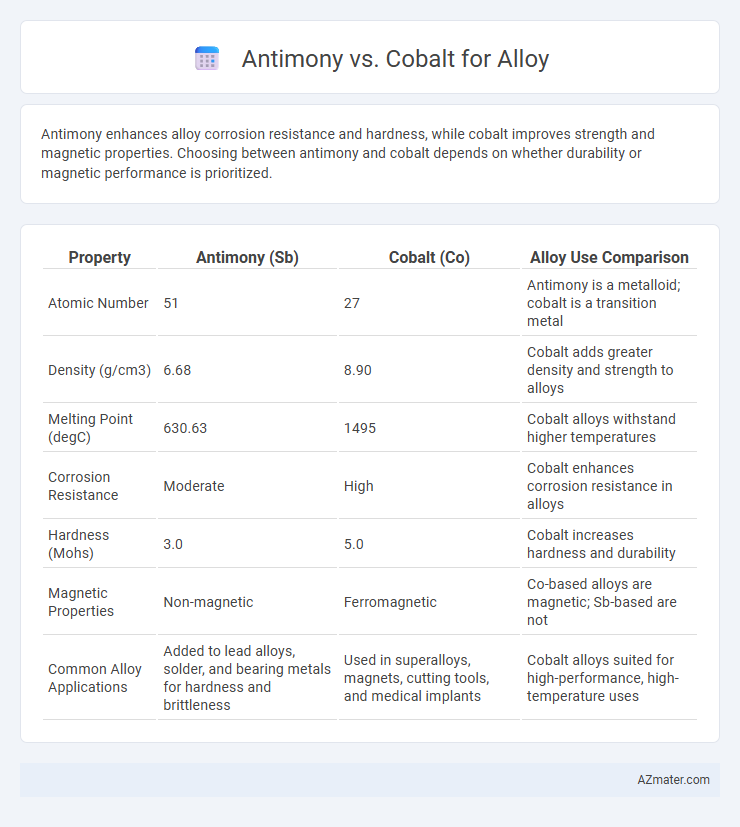

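Antimony is used mainly to harden alloys (especially lead- and tin-based ones) and, in some of them, to improve corrosion resistance. Cobalt improves strength, high-temperature stability, and magnetic properties. Choosing between them depends on the base metal, and on whether the priority is hardness in low-melting alloys or strength, heat resistance, and magnetic performance.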

Table of Comparison

| Property | Antimony (Sb) | Cobalt (Co) | Alloy Use Comparison |
|---|---|---|---|
| Atomic number | 51 | 27 | Antimony is a metalloid; cobalt is a transition metal |
| Density (g/cm³) | 6.68 | 8.90 | Cobalt is the denser element |
| Melting point (°C) | 630.63 | 1495 | Cobalt alloys withstand much higher temperatures |
| Corrosion resistance | Moderate | High | Cobalt-based alloys, usually containing chromium, resist corrosion well |
| Hardness (Mohs) | 3.0 | 5.0 | Cobalt is the harder element; both are used to raise hardness in their typical alloys |
| Magnetic properties | Non-magnetic (diamagnetic) | Ferromagnetic | Cobalt is the basis of many magnetic alloys; antimony contributes no magnetism |
| Common alloy applications | Added to lead and tin alloys, solders, and bearing metals to increase hardness, at some cost in ductility | Used in superalloys, magnets, cutting tools, and medical implants | Cobalt alloys suit high-performance, high-temperature uses |

Introduction to Antimony and Cobalt Alloys

Antimony and cobalt alloys are widely used in industries that require specific mechanical and chemical properties. Antimony is valued mainly as a hardening addition. It increases the strength and durability of lead- and tin-based materials used in battery grids, solders, and bearing metals. Its use in flame retardants is as a compound (antimony trioxide) rather than as an alloy. Cobalt alloys are known for high strength, corrosion resistance, and stability at elevated temperatures. These properties make them essential in aerospace, medical implants, and high-performance tooling.

Chemical and Physical Properties Comparison

Antimony is a metalloid with a melting point of 630.6 °C and a density of 6.697 g/cm³. It is brittle and stable in air at room temperature, and it is added to alloys to raise hardness and, in some cases, corrosion resistance. Cobalt is a transition metal with a melting point of 1495 °C and a density of 8.90 g/cm³. It is ferromagnetic, strong, and wear resistant, and it improves the mechanical performance and thermal stability of alloys. Their bonding also differs. Antimony's partly covalent, metalloid bonding makes it brittle and a much poorer electrical conductor than cobalt. Cobalt's metallic bonding supports strength and good conductivity in its alloys.
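The density figures above support a rough estimate of an alloy's density. The sketch below assumes ideal mixing, meaning no volume change when the elements combine. Under that assumption, 1/ρ = Σ wᵢ/ρᵢ, where wᵢ are mass fractions. Real alloys, especially those that form intermetallic phases, can deviate from this estimate. The names `DENSITY_G_CM3` and `mixture_density`, and the lead density of 11.34 g/cm³ used in the example, are illustrative additions and do not come from the comparison above.

```python
"""Ideal-mixture (inverse rule of mixtures) density estimate for an alloy.

Assumption: no volume change on mixing, so 1/rho = sum(w_i / rho_i).
"""
from typing import Dict

# Room-temperature densities in g/cm^3. Sb and Co follow the text above;
# Pb (11.34) is added here only for the hypothetical worked example.
DENSITY_G_CM3: Dict[str, float] = {
    "Sb": 6.697,
    "Co": 8.90,
    "Pb": 11.34,
}


def mixture_density(mass_fractions: Dict[str, float]) -> float:
    """Estimate alloy density (g/cm^3) from mass fractions that sum to 1."""
    total = sum(mass_fractions.values())
    if abs(total - 1.0) > 1e-6:
        raise ValueError(f"mass fractions must sum to 1, got {total}")
    # The mixture's specific volume (cm^3/g) is the mass-weighted sum of
    # each element's specific volume; density is its reciprocal.
    specific_volume = sum(w / DENSITY_G_CM3[el] for el, w in mass_fractions.items())
    return 1.0 / specific_volume


if __name__ == "__main__":
    # Hypothetical example: a lead alloy containing 6 wt% antimony.
    rho = mixture_density({"Pb": 0.94, "Sb": 0.06})
    print(f"Pb-6Sb estimated density: {rho:.2f} g/cm^3")
```

The reciprocal form is used because volumes, not densities, add under ideal mixing. A simple mass-weighted average of densities would overestimate the result.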

Historical Usage in Alloy Production

Antimony has a long history as an alloying addition for hardness and mechanical strength. Notable examples are lead-based type metal, used since the early era of movable-type printing, and bullets. Cobalt's role in alloy production became prominent in the 20th century. It was valued for improving wear resistance and high-temperature stability in superalloys for aerospace and industrial turbines. These timelines reflect antimony's early use as a hardener in simple alloys and cobalt's later rise as a critical element in modern high-performance alloys.

Alloying Behavior and Compatibility

Antimony hardens alloys mainly through solid-solution strengthening and the formation of hard phases. The lead-antimony system is a simple eutectic, in which excess antimony appears as a hard antimony-rich phase. In tin-based bearing alloys, antimony forms hard SbSn intermetallic crystals. Both effects improve hardness and wear resistance. Cobalt contributes high-temperature strength and oxidation resistance, often stabilizing the microstructure of superalloys, and it underpins magnetic alloys. Antimony and cobalt are less compatible with each other. Antimony tends to form brittle phases with cobalt, which can limit its use in cobalt-rich alloys and calls for careful control of composition and processing.
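Alloy compositions are quoted in either weight percent or atomic percent, and converting between them is a routine step when controlling composition. The following is a minimal sketch using standard atomic weights. The function name `weight_to_atomic_percent` and the Pb-6 wt% Sb example are hypothetical illustrations, not part of the discussion above.

```python
"""Convert an alloy composition from weight percent to atomic percent."""
from typing import Dict

# Standard atomic weights in g/mol. Pb is included only for the example.
ATOMIC_WEIGHT: Dict[str, float] = {
    "Sb": 121.760,
    "Co": 58.933,
    "Pb": 207.2,
}


def weight_to_atomic_percent(wt_percent: Dict[str, float]) -> Dict[str, float]:
    """Return the atomic percent of each element given its weight percent."""
    # Moles of each element per 100 g of alloy (if the inputs sum to 100);
    # the normalization below makes the result independent of that sum.
    moles = {el: w / ATOMIC_WEIGHT[el] for el, w in wt_percent.items()}
    total_moles = sum(moles.values())
    return {el: 100.0 * n / total_moles for el, n in moles.items()}


if __name__ == "__main__":
    # Hypothetical example: a lead alloy with 6 wt% antimony.
    at = weight_to_atomic_percent({"Pb": 94.0, "Sb": 6.0})
    for el, pct in at.items():
        print(f"{el}: {pct:.1f} at%")
```

Because antimony atoms are much lighter than lead atoms, a given weight percent of antimony corresponds to a noticeably higher atomic percent.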

Mechanical Strength and Durability

Antimony raises the hardness and wear resistance of lead- and tin-based alloys, largely through solid-solution strengthening and hard second phases. This improves strength and durability in applications such as lead-acid battery grids and bearings. Cobalt contributes high tensile strength and high-temperature stability. These properties make cobalt-containing alloys suited to aerospace components and medical implants that must endure sustained stress. In short, cobalt offers better long-term performance in extreme conditions, while antimony is an effective hardener where mechanical demands are moderate.

Corrosion Resistance and Stability

Antimony is reported to improve corrosion resistance in some alloys, such as lead alloys used in acidic service, although the effect depends strongly on the alloy and the environment. Cobalt improves mechanical strength and resistance to high-temperature oxidation, which sustains performance under thermal stress. The choice between the two depends on the specific corrosion challenges and operating temperatures. Antimony is considered mainly for chemical resistance at low temperatures, and cobalt for thermal stability.

Industrial Applications: Antimony vs Cobalt

Antimony adds hardness and strength to lead-acid battery grids, solders, and bearing metals; separately, antimony compounds serve as flame-retardant synergists. Cobalt offers exceptional wear resistance, high-temperature strength, and magnetic properties, which are crucial for superalloys in aerospace, cutting tools, and permanent magnets. Industrially, antimony is chosen to harden low-melting lead and tin alloys, while cobalt alloys are chosen for extreme environments that demand durability and precision.

Environmental and Health Considerations

Antimony poses environmental risks mainly during mining and processing, when it can contaminate water and soil. Occupational exposure is linked to respiratory problems and skin irritation. Cobalt also raises health concerns. Some forms are classified as possible or probable human carcinogens, and workers exposed to cobalt dust or fumes can develop lung and skin diseases. Choosing between the two requires assessing both environmental impact and occupational hazards, and ongoing research focuses on safer handling and alternative materials.

Economic Factors: Availability and Cost

Antimony is far scarcer in Earth's crust than cobalt. Its mine production is concentrated in a few countries, led by China, which creates supply-chain risk for alloy producers. Cobalt is more abundant, but most of it is recovered as a byproduct of copper and nickel mining, much of it in the Democratic Republic of the Congo. As a result, cobalt supply cannot easily respond to demand, and its price has also been volatile. Both elements appear on critical-mineral lists in the United States and the European Union. Cost comparisons between antimony- and cobalt-based alloys therefore depend heavily on market conditions at the time of purchase.

Future Trends in Alloy Development

Antimony and cobalt are likely to keep shaping alloy development. Antimony enhances hardness and, in some alloys, corrosion resistance, while cobalt improves thermal stability and magnetic performance. Materials that combine the two are reportedly being explored for applications in aerospace, electronics, and energy storage. Advances in nanotechnology and additive manufacturing also allow tailored microstructures, which may help combine the benefits of both elements in stronger, lighter, and more durable alloys.

Infographic: Antimony vs Cobalt for Alloy

azmater.com